# Understanding MSE (Mean Squared Error) in Depth

Mean Squared Error (MSE) is one of the most widely used metrics for evaluating regression models. It quantifies how wrong your predictions are on average, giving you a single number to assess model performance.

## What is MSE?

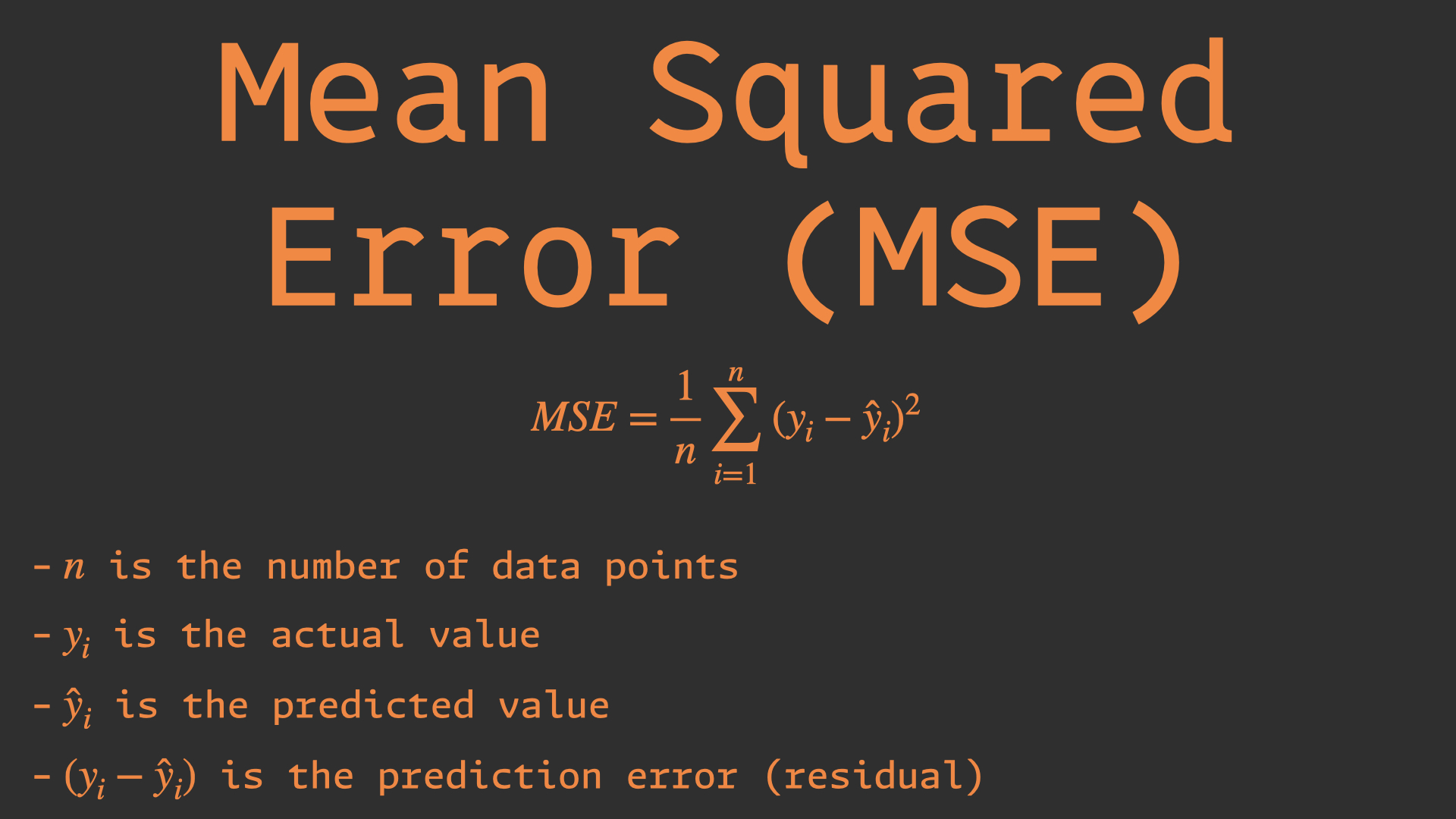

MSE calculates the average of the squared differences between predicted and actual values:

$$ \text{MSE} = \frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 $$

Where:

- $n$ is the number of data points

- $y_i$ is the actual value

- $\hat{y}_i$ is the predicted value

- $(y_i - \hat{y}_i)$ is the prediction error (residual)
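As a quick worked example, take the actual values 100, 200, 300, 400, 500 and the predictions 110, 190, 295, 410, 480 (the same numbers used in the implementation below). The residuals $y_i - \hat{y}_i$ are −10, 10, 5, −10 and 20, so:

$$ \text{MSE} = \frac{(-10)^2 + 10^2 + 5^2 + (-10)^2 + 20^2}{5} = \frac{100 + 100 + 25 + 100 + 400}{5} = \frac{725}{5} = 145 $$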

## Why Square the Errors?

Squaring serves three critical purposes:

- Makes all errors non-negative: Without squaring, positive and negative errors would cancel out

- Penalizes large errors more: A single prediction off by 10 units adds 100 to the sum of squared errors, while two predictions off by 5 units each add only 50 in total

- Mathematical convenience: The squared error is smooth and differentiable everywhere (unlike the absolute error, which has a kink at zero), so its gradients are simple to compute during optimization
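The first two points can be checked numerically with a few made-up residuals. This is a minimal sketch; the residual values are illustrative and do not come from a real model.

```python
import numpy as np

# Errors of equal size but opposite sign (illustrative values)
residuals = np.array([10.0, -10.0])
print(np.mean(residuals))       # 0.0   -> raw errors cancel out
print(np.mean(residuals ** 2))  # 100.0 -> squared errors do not

# One error of 10 vs. two errors of 5: same total absolute error...
one_big = np.array([10.0])
two_small = np.array([5.0, 5.0])
print(np.sum(np.abs(one_big)), np.sum(np.abs(two_small)))  # 10.0 10.0

# ...but after squaring, the single large error costs twice as much
print(np.sum(one_big ** 2), np.sum(two_small ** 2))  # 100.0 50.0
```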

## Implementing MSE from Scratch

```python
import numpy as np


def calculate_mse(y_true, y_pred):
    """
    Calculate Mean Squared Error from scratch

    Args:
        y_true: Array of actual values
        y_pred: Array of predicted values

    Returns:
        MSE value
    """
    # Convert inputs so plain Python lists work too
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)

    # Calculate squared differences
    squared_errors = (y_true - y_pred) ** 2

    # Return the mean
    return np.mean(squared_errors)


# Example usage
y_actual = np.array([100, 200, 300, 400, 500])
y_predicted = np.array([110, 190, 295, 410, 480])

mse = calculate_mse(y_actual, y_predicted)
print(f"MSE: {mse:.2f}")  # MSE: 145.00

# Compare with sklearn
from sklearn.metrics import mean_squared_error

mse_sklearn = mean_squared_error(y_actual, y_predicted)
print(f"MSE (sklearn): {mse_sklearn:.2f}")  # MSE (sklearn): 145.00
```

## MSE in the Training Process

During training, linear regression finds the best-fit line by minimizing MSE. Fitting a line this way is known as Ordinary Least Squares (OLS). The example below minimizes the same objective iteratively with gradient descent:

```python
import numpy as np
import matplotlib.pyplot as plt

# Generate sample data
np.random.seed(42)
X = np.random.rand(100, 1) * 10
y = 2 * X.squeeze() + 1 + np.random.randn(100) * 2


# Manual gradient descent to minimize MSE
def train_with_mse(X, y, learning_rate=0.01, epochs=1000):
    # Initialize parameters
    m = 0  # slope
    b = 0  # intercept
    n = len(X)
    mse_history = []

    for epoch in range(epochs):
        # Predictions
        y_pred = m * X.squeeze() + b

        # Calculate MSE
        mse = np.mean((y - y_pred) ** 2)
        mse_history.append(mse)

        # Calculate gradients
        dm = -(2/n) * np.sum(X.squeeze() * (y - y_pred))
        db = -(2/n) * np.sum(y - y_pred)

        # Update parameters
        m = m - learning_rate * dm
        b = b - learning_rate * db

    return m, b, mse_history


# Train the model
slope, intercept, mse_values = train_with_mse(X, y)

# Plot MSE over epochs
plt.figure(figsize=(10, 4))

plt.subplot(1, 2, 1)
plt.plot(mse_values[:100])
plt.xlabel('Epoch')
plt.ylabel('MSE')
plt.title('MSE Decreases During Training')

plt.subplot(1, 2, 2)
plt.scatter(X, y, alpha=0.5)
plt.plot(X, slope * X.squeeze() + intercept, 'r-', linewidth=2)
plt.xlabel('X')
plt.ylabel('y')
plt.title(f'Final Model (MSE: {mse_values[-1]:.2f})')

plt.tight_layout()
plt.show()
```
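The gradients in `train_with_mse` come from differentiating MSE with respect to the slope $m$ and the intercept $b$, where $\hat{y}_i = m x_i + b$:

$$ \frac{\partial\,\text{MSE}}{\partial m} = -\frac{2}{n}\sum_{i=1}^{n} x_i (y_i - \hat{y}_i), \qquad \frac{\partial\,\text{MSE}}{\partial b} = -\frac{2}{n}\sum_{i=1}^{n} (y_i - \hat{y}_i) $$

OLS sets both gradients to zero and solves the resulting linear equations directly instead of iterating. The sketch below regenerates the same synthetic data and solves the least-squares problem with NumPy's `np.linalg.lstsq`. It then checks that result against a compact gradient-descent loop. The 5000 epochs are an assumption, chosen so the iterative estimate has fully converged.

```python
import numpy as np

# Same synthetic data as the training example
np.random.seed(42)
X = np.random.rand(100, 1) * 10
y = 2 * X.squeeze() + 1 + np.random.randn(100) * 2
x = X.squeeze()

# Closed-form OLS: minimize ||A @ w - y||^2 with design matrix A = [x, 1]
A = np.column_stack([x, np.ones_like(x)])
(ols_slope, ols_intercept), *_ = np.linalg.lstsq(A, y, rcond=None)

# Gradient descent on the same MSE objective, using the gradients above
m, b, learning_rate = 0.0, 0.0, 0.01
for _ in range(5000):
    residual = y - (m * x + b)
    dm = -2 * np.mean(x * residual)
    db = -2 * np.mean(residual)
    m -= learning_rate * dm
    b -= learning_rate * db

ols_mse = np.mean((y - (ols_slope * x + ols_intercept)) ** 2)
gd_mse = np.mean((y - (m * x + b)) ** 2)
print(f"OLS: slope={ols_slope:.4f}, intercept={ols_intercept:.4f}, MSE={ols_mse:.4f}")
print(f"GD:  slope={m:.4f}, intercept={b:.4f}, MSE={gd_mse:.4f}")
```

Both approaches should land on the same line. The closed form is convenient for small linear models, while gradient descent is the approach that carries over to models without a closed-form solution.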

## Interpreting MSE Values

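MSE is in the squared units of your target variable (for house prices, dollars squared), which makes its magnitude hard to interpret. Taking the square root gives the Root Mean Squared Error (RMSE), which is back in the target's units: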

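```python
import numpy as np
from sklearn.metrics import mean_squared_error, mean_absolute_error

# Example: House price prediction
actual_prices = np.array([200000, 300000, 400000, 500000, 600000])
predicted_prices = np.array([210000, 290000, 405000, 495000, 615000])

mse = mean_squared_error(actual_prices, predicted_prices)
rmse = np.sqrt(mse)
mae = mean_absolute_error(actual_prices, predicted_prices)

print(f"MSE: {mse:,.0f} (dollars squared)")  # MSE: 95,000,000 (dollars squared)
print(f"RMSE: ${rmse:,.0f}")  # RMSE: $9,747
print(f"MAE: ${mae:,.0f}")  # MAE: $9,000

# RMSE is in dollars, so it is easier to interpret: a typical error is roughly $9,747.
# Because squaring weights large errors more, RMSE is never smaller than MAE.
```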

## MSE vs Other Metrics

```python
import numpy as np
from sklearn.metrics import mean_squared_error, mean_absolute_error, r2_score

# Dataset with an outlier
y_true = np.array([100, 200, 300, 400, 1000])  # 1000 is an outlier
y_pred = np.array([110, 190, 310, 390, 500])  # Model predicts 500

mse = mean_squared_error(y_true, y_pred)
mae = mean_absolute_error(y_true, y_pred)
r2 = r2_score(y_true, y_pred)

print(f"MSE: {mse:.0f}")  # MSE: 50080
print(f"MAE: {mae:.0f}")  # MAE: 108
print(f"R²: {r2:.2f}")  # R²: 0.50

# MSE is heavily influenced by the outlier
# Absolute errors: [10, 10, 10, 10, 500]
# Squared errors: [100, 100, 100, 100, 250000]
```

Key Differences:

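- MSE: Sensitive to outliers, differentiable everywhere, the standard loss for training regression models

- MAE: More robust to outliers, not differentiable at zero, in the same units as the target (easier to interpret)

- R²: Scale-free; 1 means perfect predictions, 0 means no better than always predicting the mean, and it can be negative for worse models. It shows the proportion of variance explained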
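R² is itself built from MSE. It compares the model's MSE with the MSE of a baseline that always predicts the mean of the actual values: $R^2 = 1 - \text{MSE}_{\text{model}} / \text{MSE}_{\text{mean}}$. The sketch below checks this on the outlier dataset above. It also uses a deliberately poor, made-up set of predictions (`bad_pred`, hypothetical) to show R² dropping below zero.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score

y_true = np.array([100, 200, 300, 400, 1000])
y_pred = np.array([110, 190, 310, 390, 500])

# MSE of the model vs. MSE of always predicting the mean of y_true
mse_model = mean_squared_error(y_true, y_pred)
mse_baseline = np.mean((y_true - y_true.mean()) ** 2)  # population variance of y_true
r2_manual = 1 - mse_model / mse_baseline

print(f"R² (manual):  {r2_manual:.4f}")                 # R² (manual):  0.4992
print(f"R² (sklearn): {r2_score(y_true, y_pred):.4f}")  # R² (sklearn): 0.4992

# Hypothetical predictions that do worse than the mean baseline
bad_pred = np.array([1000, 900, 800, 700, 100])
print(f"R² (bad model): {r2_score(y_true, bad_pred):.2f}")  # R² (bad model): -3.90
```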

## When MSE Might Mislead

- Scale Dependency: MSE is measured in the squared units of the target, so its magnitude depends on the measurement scale. A larger MSE on one scale does not by itself mean a worse model; MSE values simply cannot be compared directly across different units.

```python
import numpy as np
from sklearn.metrics import mean_squared_error

# Same temperatures measured on two scales
celsius = np.array([0, 10, 20, 30, 40])
fahrenheit = celsius * 9/5 + 32

# Add the same relative error (10%)
celsius_pred = celsius * 1.1
fahrenheit_pred = fahrenheit * 1.1

mse_c = mean_squared_error(celsius, celsius_pred)
mse_f = mean_squared_error(fahrenheit, fahrenheit_pred)

print(f"MSE (Celsius): {mse_c:.2f}")  # MSE (Celsius): 6.00
print(f"MSE (Fahrenheit): {mse_f:.2f}")  # MSE (Fahrenheit): 52.72

# Same 10% relative error, very different MSE!
```
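For a pure change of units, the effect on MSE is exact. Converting $y \mapsto a\,y + c$ multiplies every residual by $a$, because the offset $c$ cancels in $y - \hat{y}$. MSE is therefore multiplied by $a^2$. A small check: the sketch converts the *same* Celsius predictions to Fahrenheit ($a = 9/5$). The helper `to_fahrenheit` is just for illustration.

```python
import numpy as np
from sklearn.metrics import mean_squared_error

celsius = np.array([0, 10, 20, 30, 40])
celsius_pred = celsius * 1.1


def to_fahrenheit(c):
    # Illustrative helper: linear unit conversion y -> 9/5 * y + 32
    return c * 9 / 5 + 32


# Convert both the actuals and the same predictions to Fahrenheit
mse_c = mean_squared_error(celsius, celsius_pred)
mse_f = mean_squared_error(to_fahrenheit(celsius), to_fahrenheit(celsius_pred))

print(f"MSE (Celsius):    {mse_c:.2f}")    # MSE (Celsius):    6.00
print(f"MSE (Fahrenheit): {mse_f:.2f}")    # MSE (Fahrenheit): 19.44
print(f"Ratio: {mse_f / mse_c:.2f}")       # Ratio: 3.24, i.e. (9/5) ** 2
```

RMSE scales by the conversion factor itself ($9/5$), not its square, which is one more reason it is easier to reason about than MSE.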

- Outlier Sensitivity: One bad prediction can dominate MSE

```python
import numpy as np

# Two models with the same MAE but different MSE
model1_errors = np.array([5, 5, 5, 5, 5])  # Consistent small errors
model2_errors = np.array([1, 1, 1, 1, 21])  # One large error

# Mean Absolute Error (MAE)
mae1 = np.mean(np.abs(model1_errors))
mae2 = np.mean(np.abs(model2_errors))

# Mean Squared Error (MSE)
mse1 = np.mean(model1_errors ** 2)
mse2 = np.mean(model2_errors ** 2)

print(f"Model 1 → MAE: {mae1:.1f}, MSE: {mse1:.1f}")  # MAE: 5.0, MSE: 25.0
print(f"Model 2 → MAE: {mae2:.1f}, MSE: {mse2:.1f}")  # MAE: 5.0, MSE: 89.0

# Same average absolute error, but MSE punishes the single large error much more.
```
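To see whether a high MSE is driven by a few bad predictions, look at each prediction's share of the total squared error. It also helps to check how much MSE changes when the worst point is dropped. This is a simple diagnostic sketch rather than a specific library feature, applied here to Model 2's errors.

```python
import numpy as np

errors = np.array([1, 1, 1, 1, 21])  # Model 2's errors from above
squared = errors.astype(float) ** 2

# Fraction of the total squared error contributed by each prediction
share = squared / squared.sum()
for i in np.argsort(share)[::-1]:
    print(f"point {i}: error={errors[i]}, share of squared error={share[i]:.1%}")

# MSE recomputed without the single worst prediction
worst = int(np.argmax(squared))
mse_without_worst = np.mean(np.delete(squared, worst))
print(f"MSE: {squared.mean():.1f}, without point {worst}: {mse_without_worst:.1f}")
# MSE: 89.0, without point 4: 1.0
```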

## Best Practices with MSE

- Always use with other metrics: Don’t rely on MSE alone

- Consider RMSE for interpretation: Same units as your target

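- Watch for outliers: Check whether a high MSE is driven by a few bad predictions

- Normalize when comparing: Use a scale-free metric such as R² when comparing models on datasets with different target scales

- Cross-validate: MSE measured on the training data is usually optimistic; estimate it on held-out data instead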

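```python
import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.linear_model import LinearRegression

# Always cross-validate your MSE
# (X, y are the synthetic data generated in the training example above)
model = LinearRegression()

# scikit-learn scorers follow "higher is better", so MSE comes back negated
cv_mse_scores = -cross_val_score(model, X, y, cv=5,
                                 scoring='neg_mean_squared_error')

print(f"CV MSE: {np.mean(cv_mse_scores):.2f} (+/- {np.std(cv_mse_scores):.2f})")  # CV MSE: 3.41 (+/- 0.63)
```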
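With an integer `cv` and a regressor, `cross_val_score` splits the data into consecutive folds using an unshuffled `KFold`. It fits on all but one fold and scores the held-out fold. The sketch below writes that loop out by hand on the regenerated synthetic data. It should reproduce the same per-fold MSE values.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold, cross_val_score

# Same synthetic data as the training example
np.random.seed(42)
X = np.random.rand(100, 1) * 10
y = 2 * X.squeeze() + 1 + np.random.randn(100) * 2

fold_mse = []
for train_idx, test_idx in KFold(n_splits=5).split(X):
    model = LinearRegression().fit(X[train_idx], y[train_idx])
    # Score only on the held-out fold
    fold_mse.append(mean_squared_error(y[test_idx], model.predict(X[test_idx])))
fold_mse = np.array(fold_mse)

# cross_val_score returns negated MSE, so flip the sign back
cv_mse = -cross_val_score(LinearRegression(), X, y, cv=5,
                          scoring="neg_mean_squared_error")

print("Manual folds:   ", np.round(fold_mse, 2))
print("cross_val_score:", np.round(cv_mse, 2))
```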

## MSE in Production

After deployment, keep computing MSE on newly labeled data so you notice when performance degrades:

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error

# Create sample data and train a model
np.random.seed(42)
X = np.random.rand(100, 1) * 10
y = 2 * X.squeeze() + 1 + np.random.randn(100) * 2

# Split data
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model
model = LinearRegression()
model.fit(X_train, y_train)

# Calculate baseline MSE
baseline_mse = mean_squared_error(y_train, model.predict(X_train))
print(f"Training MSE: {baseline_mse:.2f}")


def monitor_model_performance(model, X_new, y_new, threshold_mse=10):
    """
    Monitor deployed model performance

    Args:
        model: Trained model
        X_new: New feature data
        y_new: New actual values
        threshold_mse: Alert threshold

    Returns:
        Current MSE (the alert status is printed)
    """
    predictions = model.predict(X_new)
    current_mse = mean_squared_error(y_new, predictions)

    if current_mse > threshold_mse:
        print(f"⚠️ ALERT: MSE {current_mse:.2f} exceeds threshold {threshold_mse}")
        print("Model may need retraining!")
    else:
        print(f"✓ Model performing well. MSE: {current_mse:.2f}")

    return current_mse


# Simulate monitoring on test data
current_mse = monitor_model_performance(model, X_test, y_test, threshold_mse=5)

# Simulate degraded performance with noisy data
X_degraded = X_test + np.random.randn(*X_test.shape) * 0.5
y_degraded = y_test + np.random.randn(*y_test.shape) * 5
degraded_mse = monitor_model_performance(model, X_degraded, y_degraded, threshold_mse=5)
```
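A fixed threshold such as `threshold_mse=5` has to be picked by hand. One alternative, offered here as an assumption rather than a standard recipe, sets the threshold relative to a reference MSE measured on held-out data. It computes MSE over successive batches of labeled production data and flags batches that exceed a multiple of that reference. The helper `batch_mse_alerts`, the batch size of 20, the factor of 1.5 and the simulated drift are all hypothetical choices.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error


def batch_mse_alerts(model, X_stream, y_stream, reference_mse, batch_size=20, factor=1.5):
    """Hypothetical helper: MSE per consecutive batch, flagged when above factor * reference_mse."""
    results = []
    for start in range(0, len(X_stream), batch_size):
        X_batch = X_stream[start:start + batch_size]
        y_batch = y_stream[start:start + batch_size]
        batch_mse = mean_squared_error(y_batch, model.predict(X_batch))
        results.append((start, batch_mse, batch_mse > factor * reference_mse))
    return results


# Synthetic data in the style of the example above (seeded generator is an assumption)
rng = np.random.default_rng(0)
X = rng.random((300, 1)) * 10
y = 2 * X.squeeze() + 1 + rng.normal(0, 2, 300)

# Rows 0-79 train the model; rows 80-99 give a held-out reference MSE
model = LinearRegression().fit(X[:80], y[:80])
reference_mse = mean_squared_error(y[80:100], model.predict(X[80:100]))

# Rows 100-299 act as the production stream; the last 100 get extra target noise
X_stream, y_stream = X[100:], y[100:].copy()
y_stream[100:] += rng.normal(0, 5, 100)

results = batch_mse_alerts(model, X_stream, y_stream, reference_mse)
for start, batch_mse, alert in results:
    flag = "ALERT" if alert else "ok"
    print(f"stream rows {start}-{start + 19}: MSE={batch_mse:.2f} [{flag}]")
```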

MSE remains the cornerstone metric for regression because it directly connects to the optimization process. While it has limitations, understanding MSE deeply helps you build better models and know when to use alternative metrics.